From Copy-Paste to Autonomous Coding Agents: A Subtle Introduction

Hello, my name is Taeseo Um, currently on the ML team at HyperAccel.

There is a running joke among developers that our job has always been about googling the issue, clicking the first link that pops up, then copying from Stack Overflow. In some ways, the arrival of AI coding tools didn’t change what we do — it just made us dramatically better at it. Over the past few months, our team has ridden almost every wave of AI-assisted development, from the earliest interactions to the autonomous agents we rely on today. Here’s what that journey looked like, what we learned, and where we think it’s all heading.

The Early Days: AI as a Smarter Search Engine

When large language models first became accessible to the world, nobody had a playbook, especially developers. The general mass treated LLMs in various ways: friend, teacher, personal therapist, a generic search engine. What about developers? The initial use case was embarrassingly simple: copy an error message, paste it into ChatGPT, and hope for a useful answer. It was Stack Overflow with a conversational interface, and honestly, it worked surprisingly well. Instead of scrolling through forum threads to find the one answer that matched your exact Python version, you could get a tailored explanation in seconds.

From there, usage naturally expanded. Developers started asking syntax questions, requesting code snippets, and getting explanations of unfamiliar APIs. The mental model was still very much “I have this specific question, give me an answer that matches what I need.” But a subtle shift was already underway. Once people realized these models could hold context, they started feeding in larger chunks of code — sometimes entire files — and asking higher-level questions about architecture, design patterns, and debugging strategies. The AI wasn’t writing all our code yet, but it was becoming a surprisingly competent pair-programming partner (one where I don’t feel embarrassed asking dumb questions to).

Tab Complete Changed Everything

The real inflection point came with tools like GitHub Copilot and Cursor. Autocomplete had existed in IDEs for decades, but this was fundamentally different. Instead of suggesting variable names and method signatures, these tools were predicting entire blocks of logic. You’d start typing something, maybe even just a comment, and the rest would appear like magic. Press tab. Accept. Move on. Forget.

It’s hard to overstate how much this changed the rhythm of coding. Writing boilerplate, repetitive patterns, and standard implementations went from minutes to seconds. Developers got faster, and perhaps more importantly, they stayed in flow longer. The friction of context-switching to documentation or search engines basically vanished.

Then the agentic experience arrived. Tools like Cursor evolved beyond simple autocomplete into something more ambitious. You could describe a problem in natural language, and the LLM / IDE would traverse your codebase, identify the relevant files, propose changes across multiple locations, and ask you to review and accept. The developer’s role shifted from writing code to directing and reviewing it. It felt like having a junior engineer who could read your entire repository in milliseconds.

CLI Agents and the Rise of Structured AI Workflows

As powerful as IDE-integrated tools were, we found they still lacked structure. When Cursor introduced the concept of rules — markdown files that defined coding standards, commenting conventions, and general information — we jumped on it. We added rule files to nearly every repository so that anyone using AI-assisted tooling would at least produce code that followed our team’s standards. It was a simple idea with substantial impact.

The next leap was MCPs (Model Context Protocols), which let AI tools connect to external data sources. Suddenly, an agent could pull context from our Confluence documentation, understand our internal documents, and reference design decisions that lived outside the codebase. The AI wasn’t just reading code anymore — it was reading our institutional knowledge.

However, what truly attached a rocket booster to our team’s “let’s improve productivity with AI” mindset was Claude Code. Claude Code introduced the concept of skills, which go far beyond static rules and instead enable structured, multi-step workflows.

In one of our team repositories, we now maintain a .claude directory to manage skills for planning, feature implementation, writing tests, opening PRs, fetching and incorporating PR review comments, and more. We have also embedded platform-specific hardware constraints, anti-patterns our team learned the hard way, and SDK-specific conventions into either skills or Claude Code rules.

We will cover in depth how we designed our Claude Code skills files and how we optimized other Claude Code use cases in future blog posts.

The difference between rule files and skills is similar to the difference between a style guide and a “trained teammate.” A rule says, “Use pytest, do not use unittest.” A skill says, “Write a test suite appropriate for this type of module, execute it, automatically fix failures up to five times if needed, and summarize the results in a report.”

Skills like /implement-tested, /pr-review, and /open-pr have transformed tasks that used to take hours into workflows that now take minutes under human supervision.

While we had previously experimented with using LLMs for code generation, most approaches were limited to a “single prompt–single response” pattern. Claude Code’s skills allowed us to structure the entire execution flow — from planning → implementation → testing → fixing → reporting — into reusable units. In particular, they significantly reduced the cognitive load of repetitive but mentally taxing tasks, which made a tangible difference in our day-to-day productivity.

The Code Review Bottleneck

Here’s the part nobody warned us about. AI and agentic tools made developers dramatically faster at writing code. Pull requests that used to take a day were being opened in an hour. But code review — the part that requires a human to carefully read, understand, and verify changes – didn’t get any faster. If anything, the bottleneck got worse. The faster code was produced, the longer the review queue grew, and the overall speed of shipping features didn’t increase as much as we expected.

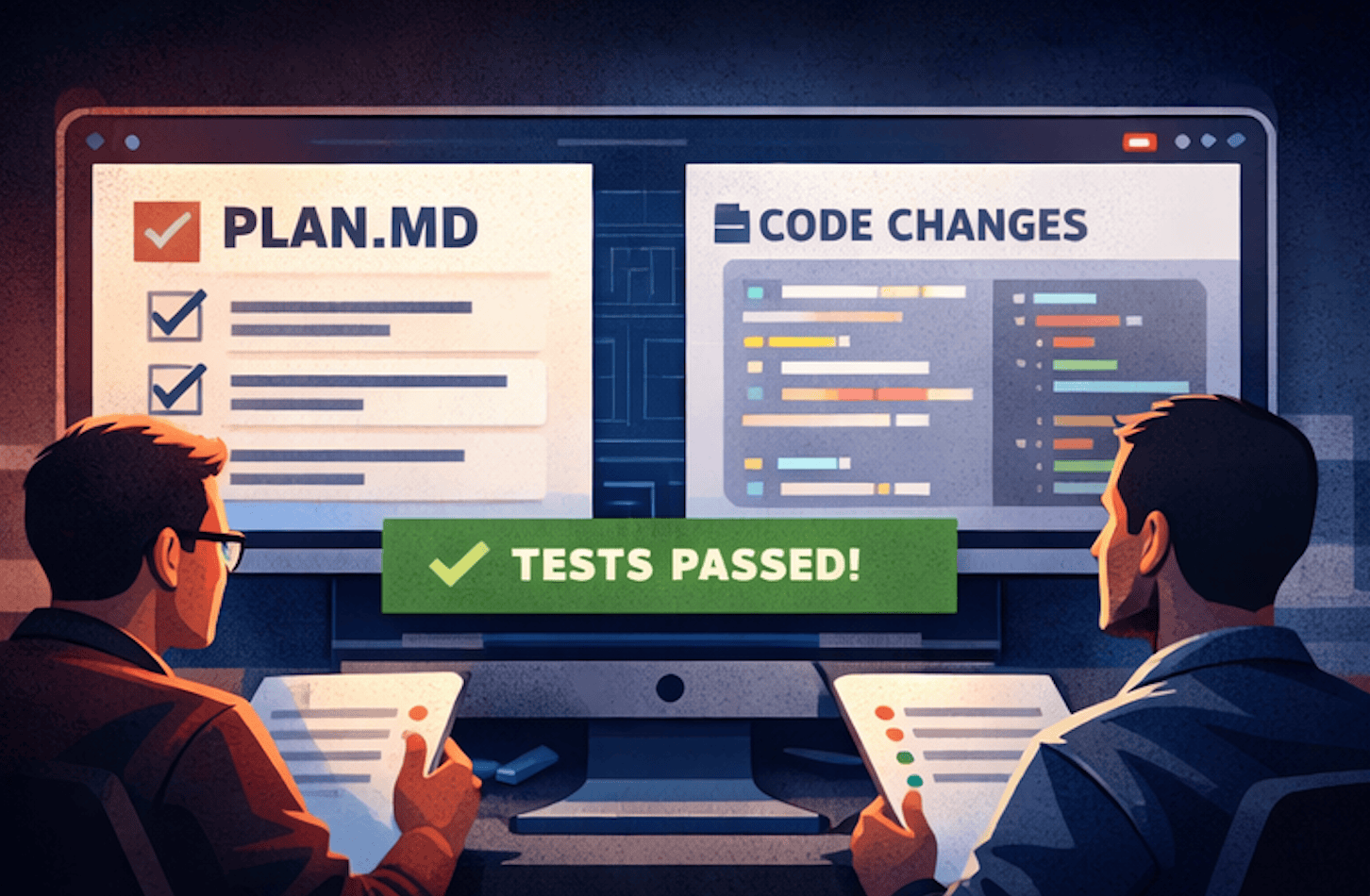

We started using AI for code review in GitHub PRs, and it helped catch surface-level issues. But the deeper question remained: how do you maintain quality at higher velocity? Our answer has been evolving. We’re moving toward a model where AI agents produce a plan.md file before writing any code — a structured document outlining the approach, the files to be changed, and the reasoning behind each decision. Human reviewers then review the plan rather than scrutinizing every line of the implementation. The key constraint is that the changed code must pass a comprehensive test suite. This shifts the burden of correctness from line-by-line review to test coverage, and it stresses the importance of writing excellent tests more than ever.

There’s a critical caveat to this approach: whenever someone creates new tests or modifies existing test code, that work must be human-reviewed. Tests are the contract that makes plan-based review trustworthy. If the tests are weak or wrong, the entire workflow model breaks down. So while we’re trying to gradually adopt this leaner review strategy for implementation code, test code remains firmly in the domain of careful human judgment.

What Does the Future Hold?

We’re still early. Not only our team, our company, but the entire software world trying to take advantage of these AI tools are still at the early stages to experience what these black boxes are truly capable of, at least that is my belief. The tools are improving monthly. You know what, no, they are improving daily. Whenever it seems like the AI workflow is stabilizing, there is always a shift. As developers, it is important to always keep an eye on these changes, always be ready to adapt. If you are not ready to adapt to these changes, you will be felt behind. You might be the best programmer in the world, but there will be someone who is a lot less talented than you in programming but knows just enough to fly past you just by knowing exactly how to take advantage of these AI tools. Our team is very open minded to AI tools and understands that this is the present and the future, and because of this collective belief, the speed of our team’s development is accelerating. We see a future where AI agents handle increasingly complex tasks and we are just there to make sure the thing that is getting done fast, is getting done right. People always talk about ‘this model’s context length’. That is important, yes. But as developers who are using these tools to do the dirty work for us, we need to be the ‘context masters’. The ones who fully get the big picture, down to the tiniest details of what needs to be done. The human role will continue shifting from writing and reviewing code toward defining intent, validating architecture, curating test suites, and making smart, fast, important judgement calls.

The teams that will thrive are the ones investing now in the infrastructure that makes this possible: well-structured skill definitions, comprehensive test coverage, clear architectural documentation, and a culture that treats AI tooling not as a shortcut but as a force multiplier that demands new kinds of discipline.

We’re not replacing developers. We’re redefining what it means to be one.

Upcoming…

This post was shared as an introduction outlining the overall direction and key questions we are exploring. In future posts, I plan to cover Skills in more depth, Kernel Agent(s), and other AI tool use cases, detailing not only how to use them but also how we designed and optimized them internally.

As the teams at HyperAccel continues to find new ways AI melts into our workflow, we will share our journey as we navigate this new paradigm of how we develop code and amazing software.

HyperAccel is Hiring!

The reason why we are writing operating this tech blog is largely too attract top talent!

If you are interest in the technologies we work with, and want to join this revolution, please apply at HyperAccel Career!

HyperAccel is full of brilliants engineers. We will be waiting.